Naked Statue Reveals One Thing: Facebook Censorship Needs Better Appeals Process

We at the łÔąĎÖ±˛Ą were reassured of one thing this past weekend: Facebook’s chest-recognition detectors are fully operational. A recent post of ours, highlighting my blog post about an attempt to censor controversial public art in Kansas, was itself deemed morally unfit for Facebook. The whole episode is a reminder that corporate censorship is bad policy and bad business.

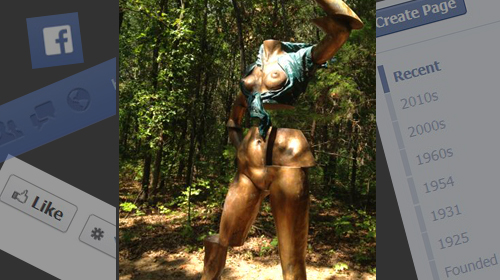

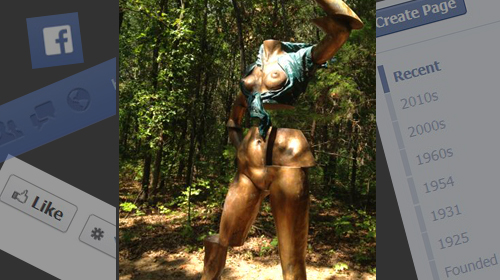

The blog is about a kerfuffle over a statue in a public park outside Kansas City: a nude woman taking a selfie of her own exposed bronze breasts. A group of citizens organized by the American Family Association believes the statue to be criminally obscene (it isn’t), and has begun a petition process to haul the sculpture to court (really, they are). Our Facebook post included a link to the blog post and a photo of the statue in question.

Our intrepid Digital Media Associate, Rekha Arulanantham, got word on Sunday that the Facebook post had been deleted, and was no longer viewable by our Facebook followers or anyone else. I duly informed my Kansas colleague that the photograph she’d taken had prompted a social media blackout. Then, astoundingly, on Tuesday morning Rekha discovered the łÔąĎÖ±˛Ą had been blocked from posting for 24 hours, with a message from Facebook warning us these were the consequences for repeat violations of its policy.

We were flabbergasted; we hadn’t tried to republish the offending post or the associated rack. So, just to get this straight: the łÔąĎÖ±˛Ą’s post on censorship was shut down, not once but twice, for including a picture of, and a political discussion about, a statue standing in a Kansas park.

Why Was Our Post about Censorship Censored?

Facebook’s notice told us that the post was removed because it “violates [Facebook’s] Community Standards.” While my blog did include a comprehensive slate of synonyms for “boobs,” it was the visual subject of the blog—the image of the statue itself—that triggered Facebook’s mammary patrol.

Look, we’re the łÔąĎÖ±˛Ą. Of course our Facebook posts are going to touch on controversial subjects—if they didn’t, we just wouldn’t be doing our jobs. We won’t ever (apologies in advance) post gratuitous nudity—flesh or metal—online. Anything we post illustrates a broader point about our civil liberties. And sure enough, this particular naked statue did just that by serving as a touchstone for a conversation about community standards and censorship. Thousands of people read the blog and hundreds commented on Facebook, weighing in on the censorship controversy. That is, before Facebook removed the post. The irony here is pretty thick.

As we read Facebook’s Community Standards, our busty statue pic was A-OK. Facebook is generally strict about human nudity, but the “Nudity and Pornography” standards also have a caveat:

Facebook has a strict policy against the sharing of pornographic content and any explicitly sexual content where a minor is involved. We also impose limitations on the display of nudity. We aspire to respect people’s right to share content of personal importance, whether those are photos of a sculpture like Michelangelo's David or family photos of a child breastfeeding.

The sculpture Holly snapped isn’t just of personal importance to her and other Kansans, it’s now of political importance too. And while art critics may or may not deem this particular bronze “a sculpture like Michelangelo’s David,” that’s precisely the analogy I used in my original blog post. The statue is at the swirling center of a community fight that implicates the First Amendment, obscenity, and even the proper use of the criminal justice system. The statue’s image belongs on Facebook, not only because it is of personal and political importance, isn’t obscene, and doesn’t violate community standards—but also because the statue is newsworthy. And Facebook should work hard to keep newsworthy content out of the censor’s crosshairs.

The Facebook Censors are Fallible

We decided to appeal Facebook’s determination that our blog post didn’t fit within community standards, just like any user might. And… we immediately hit a brick wall. The takedown notice informed us an łÔąĎÖ±˛Ą post had been removed, but didn’t exactly invite a conversation about it.

There was no “appeal” button, and we were unable to find a page where we could report or challenge the post’s deletion. The best option appeared to be a generic Facebook content form, designed to receive any input at all about a “Page.” We got a response: a canned email informing us that Facebook “can’t respond to individual feedback emails.” Not exactly promising.

But we have an advantage most Facebook users don’t: We’re a national non-profit with media access and a public profile. So we tracked down Facebook’s public policy manager, and emailed him about our dilemma. His team was immediately responsive, looked into it promptly, and told us that the post was “mistakenly removed” (and then “accidentally removed again”). Here’s what Facebook wrote to us:

We apologize for this error. Unfortunately, with more than a billion users and the hundreds of thousands of reports we process each week, we occasionally make a mistake. We hope that we've rectified the mistake to your satisfaction.

Facebook then restored the original post.

It’s certainly reassuring that Facebook agrees our original post shouldn’t have come under fire and was not a violation of the Community Standards. Unfortunately, the post was unavailable all weekend as we scrambled to figure out how to bring the mistaken deletion to Facebook’s attention. That’s a big hit in the fast-paced social media world.

More unfortunately, our ultimate success is cold comfort for anyone who has a harder time getting their emails returned than does the łÔąĎÖ±˛Ą. It’s unlikely that our experience is representative of the average aggrieved Facebook user. For most, that generic form and the canned response are as good as it’s currently going to get.

My colleague Jay Stanley has highlighted the dangers of corporate censorship before here on the pages of Free Future. He argues that as the digital world steadily eclipses the soap box as our most frequent forum for speech, companies like Facebook are gaining government-like power to enforce societal norms on massive swaths of people and content. A from our colleagues in illustrates how heavy-handed censorship is as bad a choice in business as it is in government. Fortunately, Facebook is generally receptive to these arguments. With Facebook’s mission to “make the world more open and connected,” the company is clearly mindful of the importance of safeguarding free speech.

But like all censors, its decisions can seem arbitrary, and it also just makes mistakes. If Facebook is going to play censor, it’s absolutely vital that the company figure out a way to provide a transparent mechanism for handling appeals. That’s particularly true when censorship occurs, as it so frequently does, in response to objections submitted by a third party. A complaint-driven review procedure creates a very real risk that perfectly acceptable content (like…you know, images of public art) will be flagged for removal based on the vocal objections of a disgruntled minority. A meaningful appeals process is, therefore, long overdue.

More fundamentally, this incident underscores why Facebook’s initial response to content should always err on the side of leaving it up, even when it might offend. After all, one person’s offensive bronze breast is also one of Kansas’ biggest current media stories.

That a bronze sculpture in a public park in Kansas ran afoul of the nudity police shows that Facebook’s censors could use some calibration. And when they misfire, as they did here, there must be a process in place to remove the muzzle.